Security Monitoring in AWS: CloudTrail, CloudWatch and EventBridge

April 10, 2024 · 11 minutes

💡 TLDR

In this post I’ll demonstrate how to set up a security monitoring infrastructure in AWS. The main goal is to use AWS CloudWatch, AWS Lambda and AWS EventBridge to create alerts based on specific event types from AWS CloudTrail. First I’ll use Terraform to deploy all required resources, and then I’ll implement a simple Go-based Lambda function to handle certain events.

Infrastructure

In my Documentation as Code for Cloud series I’ve described a fictional self-destructing email service which consists of several components, deployed across multiple accounts in AWS (you could do the same in Azure, GCP, etc.).

The big picture:

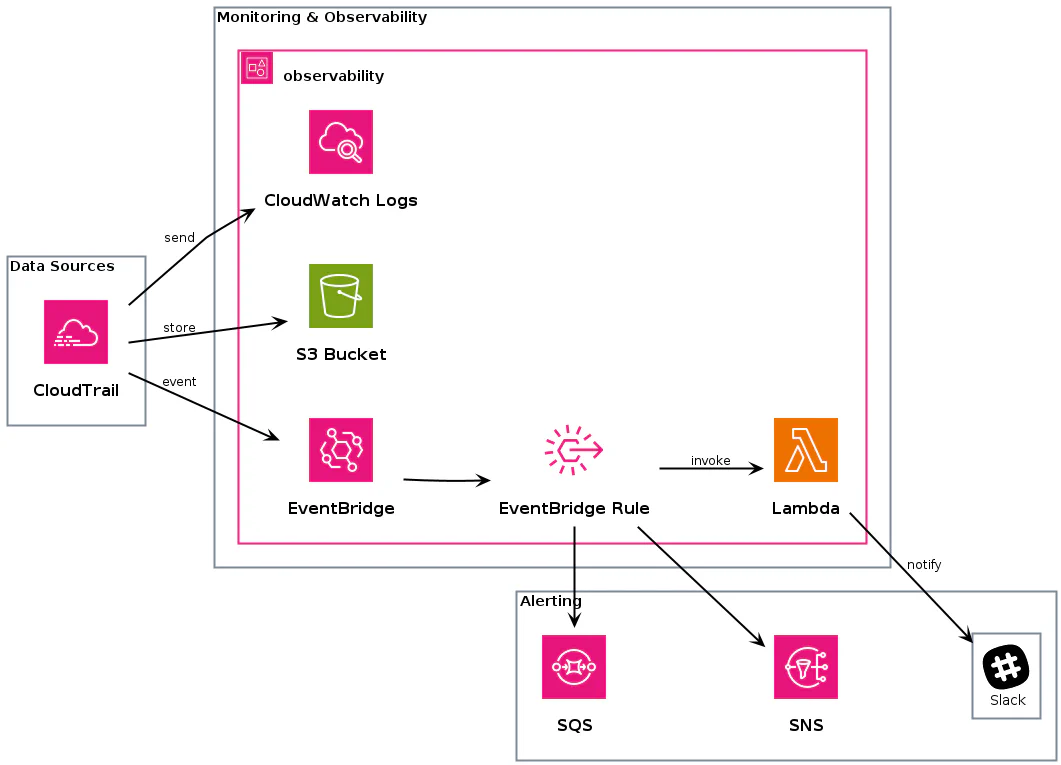

The PlantUML diagram describes a security monitoring infrastructure comprising three main components: Data Sources, Monitoring & Observability, and Alerting:

Data Sources: This section includes CloudTrail, which is a service that logs AWS API calls made on your account, providing visibility into actions taken within the AWS environment.

Monitoring & Observability: This section contains various tools and services for monitoring and observing the system. It includes CloudWatch Logs for log management and analysis, an S3 Bucket for storing logs and other data, EventBridge for event-driven architecture, EventBridge Rules for defining event routing, and Lambda for serverless computing.

Alerting: This section involves tools for alerting and notification. It includes Slack for real-time communication, SNS (Simple Notification Service) for sending notifications, and SQS (Simple Queue Service) for queuing messages.

The diagram also depicts relationships between these components:

- CloudTrail sends logs to S3 for storage and to EventBridge for event processing.

- CloudWatch receives logs from CloudTrail for monitoring and analysis.

- EventBridge Rules invoke Lambda functions for automated responses and can send messages to SQS and SNS for further processing.

- Finally, Lambda functions can directly notify Slack for alerting purposes.

Terraform

As a big fan of “Everything as Code” I’ll start by implementing IaC (Infrastructure as Code) in Terraform for activating CloudTrail, setting up the CloudWatch logs and the EventBridge rules.

I’ll first define the Terraform version I want to use:

1.7.5

💡 Use tfenv to manage multiple versions of Terraform.

Bootstrap

Before creating the actual resources, I’ll first set up the remote state (using an S3 bucket in AWS) to make sure the Terraform state file is managed safely:

provider "aws" {

region = "eu-central-1"

}

# Create S3 bucket

resource "aws_s3_bucket" "terraform_state" {

bucket = "defersec-tfstate"

lifecycle {

prevent_destroy = true

}

}

# Allow versioning

resource "aws_s3_bucket_versioning" "terraform_state" {

bucket = aws_s3_bucket.terraform_state.id

versioning_configuration {

status = "Enabled"

}

}

(Optional): Additionally, you can add state locking (which is recommended in an enterprise context with large state files), but for now I’ll just skip it:

# Use state lock in AWS DynamoDB

resource "aws_dynamodb_table" "terraform_state_lock" {

name = "app-state"

read_capacity = 20

write_capacity = 20

hash_key = "LockID"

attribute {

name = "LockID"

type = "S"

}

}

I’ll use AWS as my main Terraform provider:

provider "aws" {

region = "eu-central-1"

profile = "aws-terraform"

default_tags {

tags = {

app = "cloudtrail-events-using-lambda-and-go"

managed_by = "terraform"

}

}

}

Pin the provider to a specific version:

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 5.29.0"

}

}

}

Save the Terraform state file in an S3 bucket:

terraform {

backend "s3" {

bucket = "defersec-tfstate"

key = "prod/terraform.tfstate"

region = "eu-central-1"

# You can specify an explicit AWS profile here

# profile = "aws-terraform"

}

}

That’s it for the bootstrap. Now, let’s deep-dive into some Terraform modules.

Modules

Terraform modules encapsulate multiple resources that are used together. They serve as a way to package and reuse code, but also to organize Terraform configurations.

There are different types of Terraform modules:

- Root Module: This is the main configuration directory where you run terraform commands. Every Terraform configuration has at least one root module.

- Internal Modules: These are modules created for a single purpose, usually to be used from the root module.

- Child Modules: These are modules created within other modules (e.g. inside your own local modules). You can either create your own child modules or source them from external locations, such as the Terraform Registry or other version control systems.

We’ll create some Terraform modules for better reusability.

⮕ Cloudtrail

The infrastructure resources will be created in a single account. In an enterprise (or even for bigger projects) you may want to use a multi-account organization in AWS.

I’ll put CloudTrail in its own module:

resource "aws_cloudtrail" "default" {

# Put these into variables

name = var.trail_name

s3_bucket_name = var.trail_bucket

# Create trail in organization master account?

is_organization_trail = false

# Use a single S3 bucket for all AWS regions

is_multi_region_trail = true

# Add global service events

include_global_service_events = true

# Send logs to CloudWatch Logs

cloud_watch_logs_group_arn = "${aws_cloudwatch_log_group.cloudwatch_log_group.arn}:*"

cloud_watch_logs_role_arn = aws_iam_role.cloudtrail_cloudwatch_role.arn

# We also want Data Events for certain services (such as S3 objects)

event_selector {

read_write_type = "All"

include_management_events = true

# TODO: Create S3 bucket GitOps style

data_resource {

type = "AWS::S3::Object"

values = ["arn:aws:s3:::s3-poc-lambda-golang-eventbridge-cloudtrail/"]

}

# You can also exclude certain events

# exclude_management_event_sources = [

# "kms.amazonaws.com",

# "rdsdata.amazonaws.com"

# ]

}

depends_on = [

aws_s3_bucket.cloudtrail_bucket

]

}

Define the providers (I’ll mainly use AWS):

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 5.29.0"

}

}

}

Now let’s specify some variables:

variable "trail_name" {

description = "AWS CloudTrail Name"

}

variable "trail_bucket" {

description = "AWS CloudTrail S3 Bucket to store log data"

}

variable "cloudwatch_log_group_name" {

description = "CloudWatch Log group name for the Cloudtrail logs"

type = string

}

variable "cloudwatch_log_retention_days" {

description = "The retention length for the Cloudwatch logs"

default = 5

type = number

}

Create the CloudWatch log group:

resource "aws_cloudwatch_log_group" "cloudwatch_log_group" {

name = var.cloudwatch_log_group_name

retention_in_days = var.cloudwatch_log_retention_days

}

Next we continue with the IAM-related resources. But first, let’s fetch some information about the current environment:

# The AWS account id

data "aws_caller_identity" "current" {}

# The AWS region currently being used.

data "aws_region" "current" {}

# The AWS partition

data "aws_partition" "current" {}

# The current organization

# data "aws_organizations_organization" "current" {}

Allow CloudTrail to assume this IAM role:

# Cloudtrail assume role

data "aws_iam_policy_document" "cloudtrail_assume_role" {

statement {

effect = "Allow"

actions = ["sts:AssumeRole"]

principals {

type = "Service"

identifiers = ["cloudtrail.amazonaws.com"]

}

}

}

# This role is used by CloudTrail to send logs to CloudWatch.

resource "aws_iam_role" "cloudtrail_cloudwatch_role" {

name = "CloudtrailIAMRole"

assume_role_policy = data.aws_iam_policy_document.cloudtrail_assume_role.json

}

Let’s add an IAM policy to the role that allows CloudTrail to send logs to AWS CloudWatch:

# Allow Cloudtrail to send logs to Cloudwatch

data "aws_iam_policy_document" "cloudtrail_cloudwatch_logs" {

statement {

sid = "WriteCloudWatchLogs"

effect = "Allow"

actions = [

"logs:CreateLogStream",

"logs:PutLogEvents",

]

resources = ["arn:${data.aws_partition.current.partition}:logs:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:log-group:${var.cloudwatch_log_group_name}:*"]

}

}

# Policy to be attached to the role

resource "aws_iam_policy" "cloudtrail_cloudwatch_logs" {

name = "CloudtrailCloudwatchLogsPolicy"

policy = data.aws_iam_policy_document.cloudtrail_cloudwatch_logs.json

}

# Attach the policy to a role

resource "aws_iam_policy_attachment" "attach_policy_logs" {

name = "CloudtrailCloudwatchLogsPolicy-attachment"

policy_arn = aws_iam_policy.cloudtrail_cloudwatch_logs.arn

roles = [aws_iam_role.cloudtrail_cloudwatch_role.name]

}

Allow CloudTrail to put logs into the S3 bucket:

# Bucket policy for the Cloudtrail S3 bucket

data "aws_iam_policy_document" "cloudtrail_bucket_policy" {

# Allow to fetch bucket ACLs

statement {

sid = "AWSCloudTrailAclCheck"

effect = "Allow"

principals {

type = "Service"

identifiers = ["cloudtrail.amazonaws.com"]

}

actions = ["s3:GetBucketAcl"]

resources = [

"arn:aws:s3:::${var.trail_bucket}",

]

condition {

test = "StringEquals"

variable = "aws:SourceArn"

values = ["arn:${data.aws_partition.current.partition}:cloudtrail:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:trail/${var.trail_name}"]

}

}

# Allow Cloudtrail to put logs into S3 bucket

statement {

sid = "AWSCloudTrailWriteAccount"

effect = "Allow"

principals {

type = "Service"

identifiers = ["cloudtrail.amazonaws.com"]

}

actions = ["s3:PutObject"]

resources = ["arn:aws:s3:::${var.trail_bucket}/AWSLogs/${data.aws_caller_identity.current.account_id}/*"]

# Conditions

condition {

test = "StringEquals"

variable = "s3:x-amz-acl"

values = ["bucket-owner-full-control"]

}

condition {

test = "StringEquals"

variable = "AWS:SourceArn"

values = ["arn:aws:cloudtrail:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:trail/${var.trail_name}"]

}

}

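}

(Optional): If you use AWS Organizations, you might want CloudTrail to write logs for the whole organization. This statement belongs inside the same policy document and requires the aws_organizations_organization data source, which is commented out above: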

statement {

sid = "AWSCloudTrailWriteOrganization"

effect = "Allow"

principals {

type = "Service"

identifiers = ["cloudtrail.amazonaws.com"]

}

actions = ["s3:PutObject"]

resources = ["arn:aws:s3:::${var.trail_bucket}/AWSLogs/${data.aws_organizations_organization.current.id}/*"]

# Conditions

condition {

test = "StringEquals"

variable = "s3:x-amz-acl"

values = ["bucket-owner-full-control"]

}

condition {

test = "StringEquals"

variable = "AWS:SourceArn"

values = ["arn:aws:cloudtrail:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:trail/${var.trail_name}"]

}

}

Then create the S3 bucket that will store the logs:

# Create S3 bucket

resource "aws_s3_bucket" "cloudtrail_bucket" {

bucket = var.trail_bucket

force_destroy = true

}

# Enable server side encryption (SSE)

resource "aws_s3_bucket_server_side_encryption_configuration" "sse_s3_bucket" {

bucket = aws_s3_bucket.cloudtrail_bucket.id

rule {

apply_server_side_encryption_by_default {

sse_algorithm = "aws:kms"

}

}

}

resource "aws_s3_bucket_policy" "s3_bucket_policy" {

bucket = aws_s3_bucket.cloudtrail_bucket.id

policy = data.aws_iam_policy_document.cloudtrail_bucket_policy.json

}

# Block all public access to the bucket

resource "aws_s3_bucket_public_access_block" "block_public_access" {

bucket = aws_s3_bucket.cloudtrail_bucket.id

block_public_acls = true

block_public_policy = true

restrict_public_buckets = true

ignore_public_acls = true

}

Finally, some outputs:

output "cloudtrail_instance" {

description = "Cloudtrail instance"

value = aws_cloudtrail.default

}

output "cloudtrail_s3_bucket" {

description = "Cloudtrail S3 bucket"

value = aws_s3_bucket.cloudtrail_bucket

}

⮕ EventBridge

Now we’ll set up different modules for EventBridge.

⚠ The options for EventBridge are quite numerous. Check out the GitHub repo for the whole list.

The block below configures EventBridge to capture data events (related to S3 buckets) and send them to an SNS topic:

# Create Eventbridge rule

module "eventbridge" {

source = "terraform-aws-modules/eventbridge/aws"

create_bus = false

rules = {

s3_put_object = {

description = "Triggers when objects are uploaded to certain S3 bucket"

event_pattern = jsonencode({

"source": ["aws.s3"],

"detail-type": ["AWS API Call via CloudTrail"],

"detail": {

"eventSource": ["s3.amazonaws.com"],

"eventName": ["PutObject", "CompleteMultipartUpload"],

"requestParameters": {

"bucketName": [var.s3_bucket_name]

},

}

})

}

}

targets = {

s3_put_object = {

example_target = {

arn = aws_sns_topic.sns_topic.arn

name = "send-s3-put-events-to-sns-topic"

}

}

}

}

# Create SNS topic

resource "aws_sns_topic" "sns_topic" {

name = var.sns_topic

}

Finally, we define the variables for this module:

variable "sns_topic" {

description = "Name for the SNS topic"

default = "poc-lambda-golang-eventbridge-cloudtrail"

}

variable "s3_bucket_name" {

description = "Name of the S3 bucket"

default = "s3-poc-lambda-golang-eventbridge-cloudtrail"

}

⮕ Root Module

In the root module (especially main.tf) we glue everything together and use our internal modules:

Cloudtrail

# Create Cloudtrail

module "aws-cloudtrail" {

source = "./modules/aws-cloudtrail"

# Provide parameters to module

trail_name = var.trail_name

trail_bucket = var.trail_bucket

cloudwatch_log_group_name = var.cloudwatch_log_group_name

}

Let’s define the variables:

variable "trail_name" {

description = "AWS CloudTrail Name"

default = "poc-lambda-golang-eventbridge-cloudtrail"

}

variable "trail_bucket" {

description = "AWS CloudTrail S3 Bucket to store log data"

default = "poc-lambda-golang-eventbridge-cloudtrail"

}

variable "cloudwatch_log_group_name" {

description = "AWS Cloudwatch log group"

default = "/security/logs/cloudtrail"

}

EventBridge

# Create EventBridge rule and SNS topic

module "aws-eventbridge-sns" {

source = "./modules/aws-eventbridge/sns"

sns_topic = var.sns_topic

s3_bucket_name = var.s3_bucket_name

}

And the corresponding variables:

variable "sns_topic" {

description = "The SNS topic"

default = "poc-lambda-golang-eventbridge-cloudtrail"

}

variable "s3_bucket_name" {

description = "Name of the S3 bucket"

default = "s3-poc-lambda-golang-eventbridge-cloudtrail"

}

Deployment

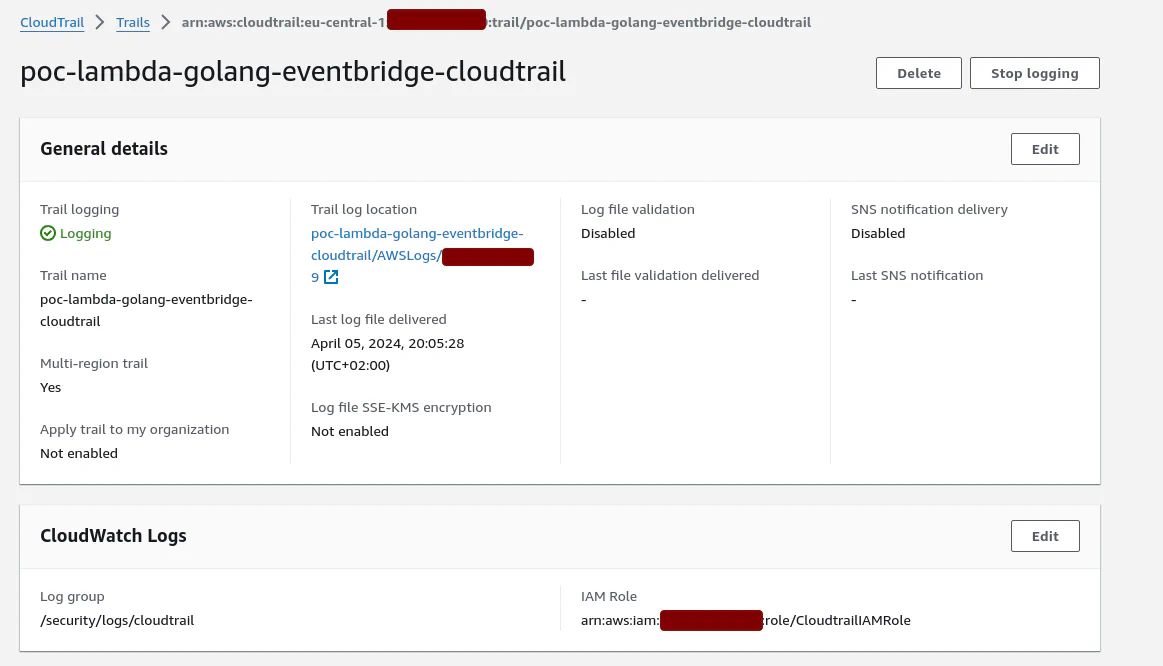

After successful deployment we should have Cloudtrail enabled:

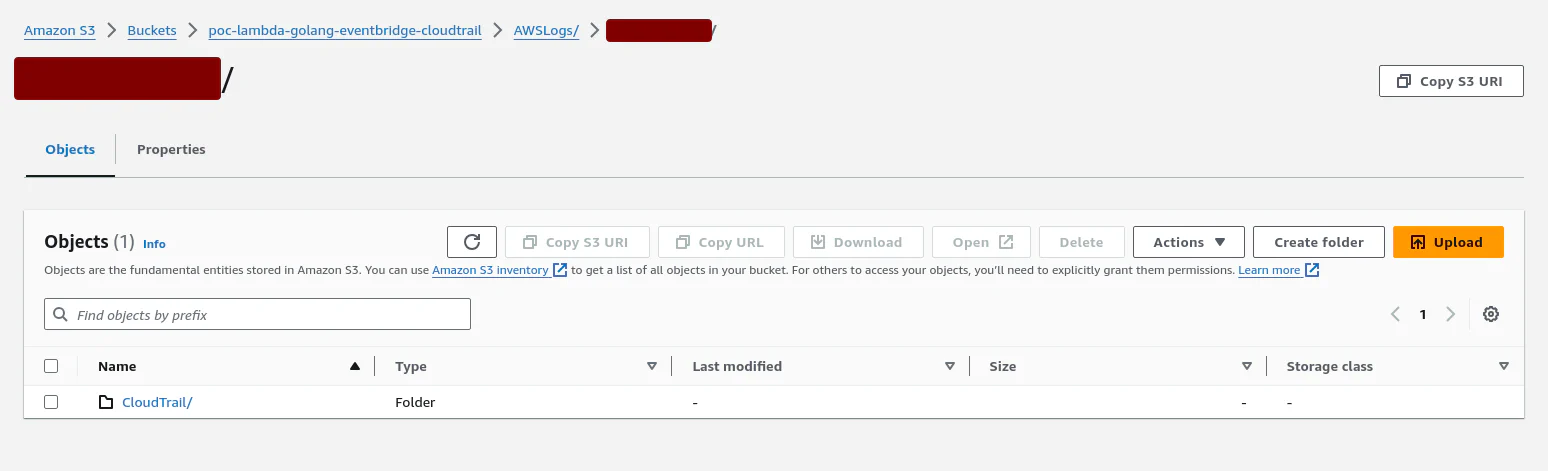

We have an S3 bucket with the log files:

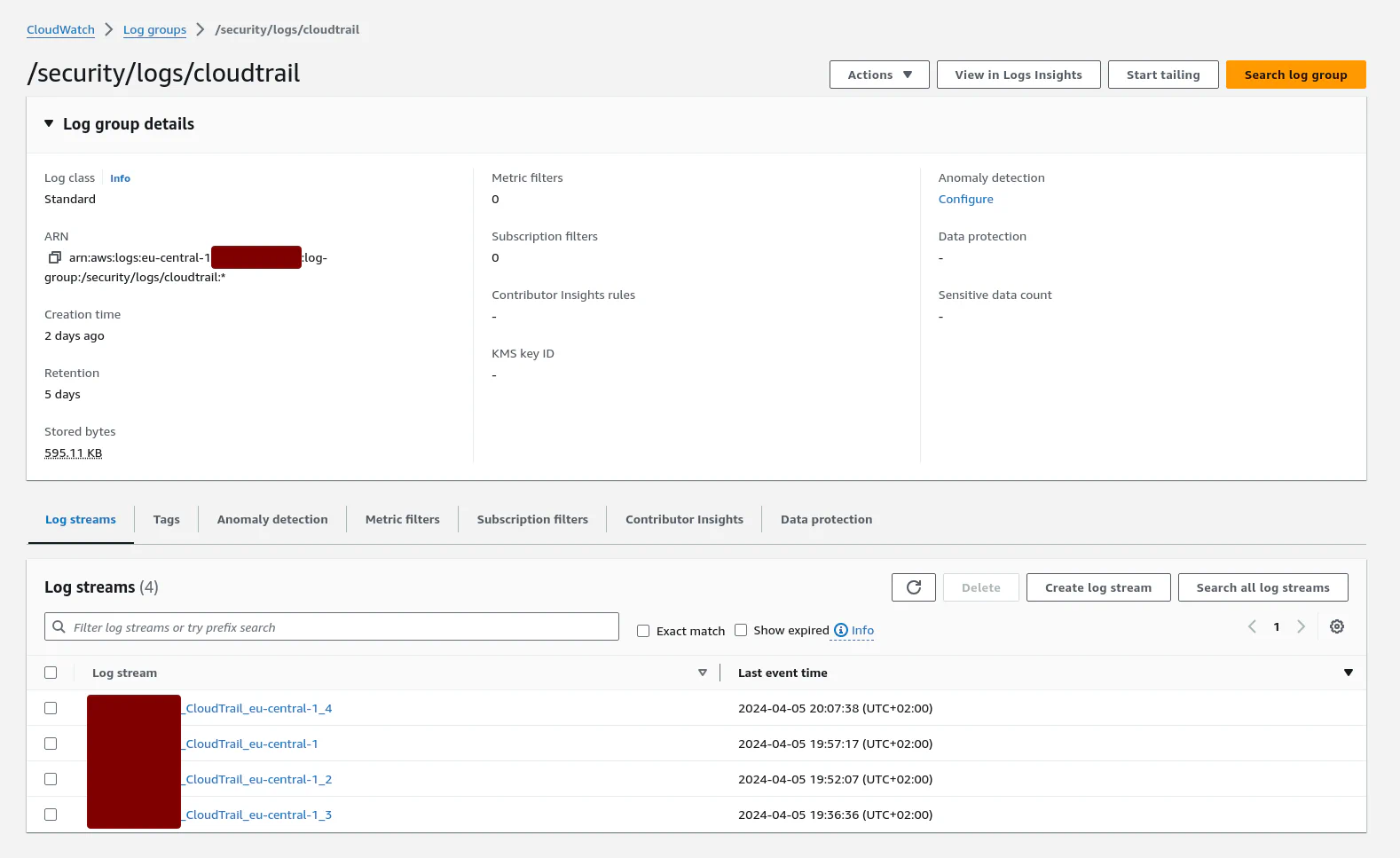
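To make it easier to find a given day's log files in that bucket, here is a minimal Go sketch of the key prefix CloudTrail writes to. It follows CloudTrail's documented `AWSLogs/<account>/CloudTrail/<region>/<yyyy>/<mm>/<dd>/` layout, which is also why the bucket policy above grants `s3:PutObject` on `AWSLogs/<account_id>/*`. The function name and the account ID `123456789012` are placeholders of mine, not part of the original setup.

```go
package main

import "fmt"

// cloudTrailLogPrefix returns the S3 key prefix under which CloudTrail
// stores the log files of one account and region for a single (UTC) day.
// It assumes the trail was created without an extra S3 key prefix.
func cloudTrailLogPrefix(accountID, region string, year, month, day int) string {
	return fmt.Sprintf("AWSLogs/%s/CloudTrail/%s/%04d/%02d/%02d/",
		accountID, region, year, month, day)
}

func main() {
	// 123456789012 is a placeholder account ID.
	prefix := cloudTrailLogPrefix("123456789012", "eu-central-1", 2024, 4, 10)
	fmt.Println(prefix)
}
```

Such a prefix can be passed to `aws s3 ls s3://<bucket>/<prefix>` to list only the log files of that day.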

There is a Cloudwatch log group:

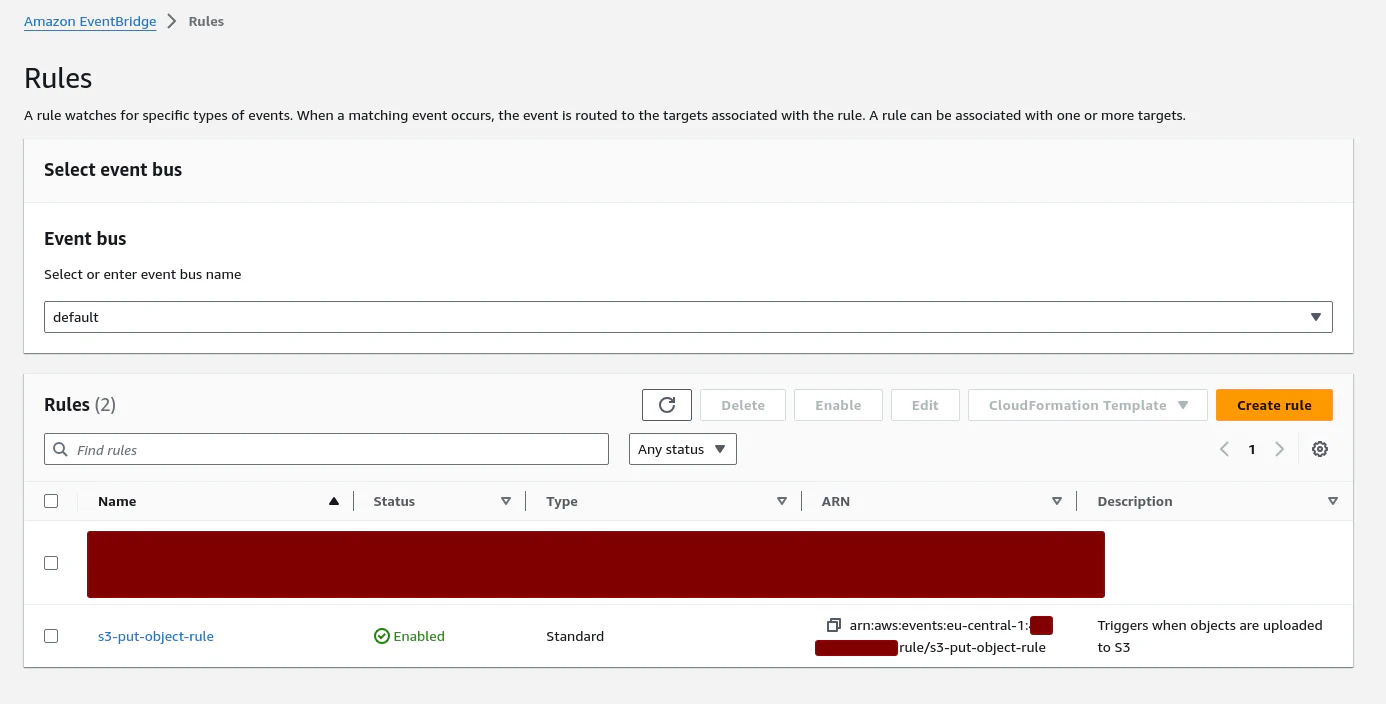

The Eventbridge rule has also been created:

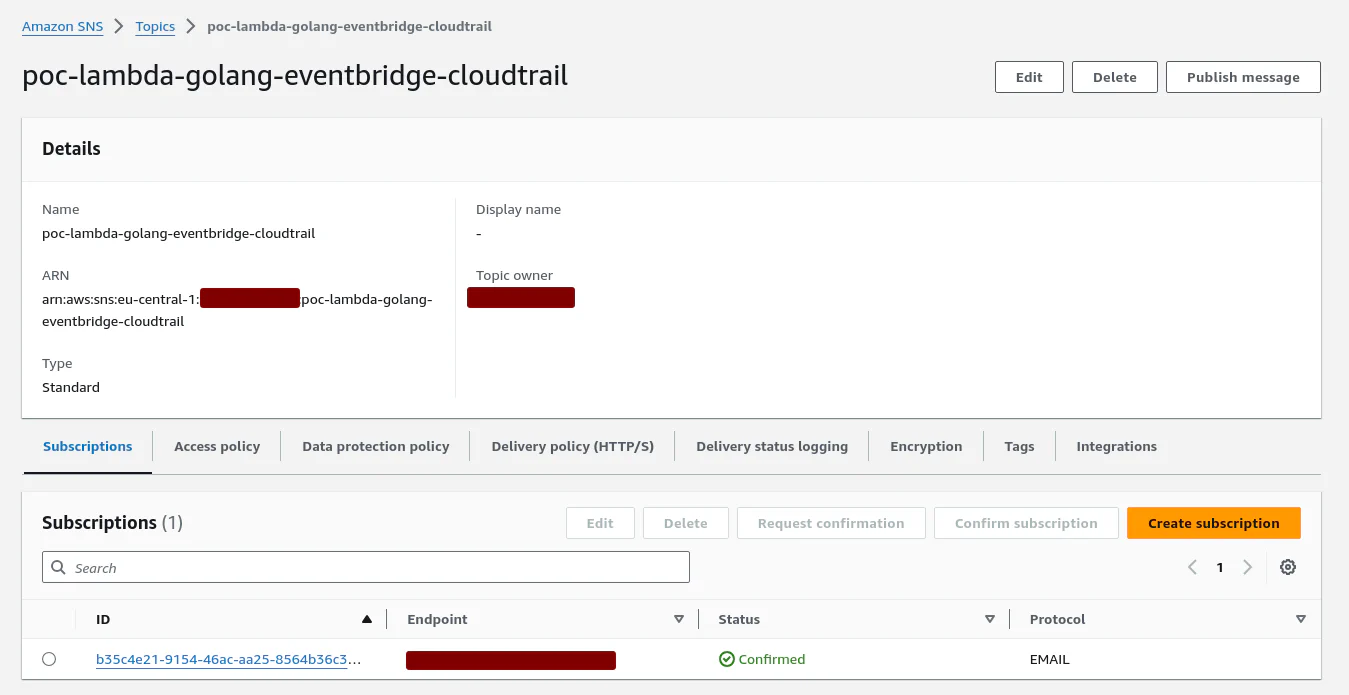
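To illustrate what this rule actually matches, the following Go sketch re-implements the `s3_put_object` event pattern by hand. It is only an approximation of EventBridge's matching for this one pattern (all listed fields must match, and each array matches if any of its values matches), not a general pattern engine. The type and function names, as well as the bucket name `demo-bucket`, are made up for the example.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// event holds only the fields that the rule's pattern inspects.
type event struct {
	Source     string `json:"source"`
	DetailType string `json:"detail-type"`
	Detail     struct {
		EventSource       string `json:"eventSource"`
		EventName         string `json:"eventName"`
		RequestParameters struct {
			BucketName string `json:"bucketName"`
		} `json:"requestParameters"`
	} `json:"detail"`
}

// oneOf reports whether value equals any of the allowed values,
// mirroring the "OR" semantics of an array in an event pattern.
func oneOf(value string, allowed ...string) bool {
	for _, a := range allowed {
		if value == a {
			return true
		}
	}
	return false
}

// matchesS3PutRule checks an event against the hand-written equivalent of
// the s3_put_object pattern; every field has to match ("AND" semantics).
func matchesS3PutRule(raw []byte, bucket string) (bool, error) {
	var e event
	if err := json.Unmarshal(raw, &e); err != nil {
		return false, fmt.Errorf("decode event: %w", err)
	}
	return oneOf(e.Source, "aws.s3") &&
		oneOf(e.DetailType, "AWS API Call via CloudTrail") &&
		oneOf(e.Detail.EventSource, "s3.amazonaws.com") &&
		oneOf(e.Detail.EventName, "PutObject", "CompleteMultipartUpload") &&
		oneOf(e.Detail.RequestParameters.BucketName, bucket), nil
}

func main() {
	// Trimmed-down example event; demo-bucket is a placeholder name.
	raw := []byte(`{
		"source": "aws.s3",
		"detail-type": "AWS API Call via CloudTrail",
		"detail": {
			"eventSource": "s3.amazonaws.com",
			"eventName": "PutObject",
			"requestParameters": {"bucketName": "demo-bucket"}
		}
	}`)
	ok, err := matchesS3PutRule(raw, "demo-bucket")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("rule matches:", ok)
}
```

A `GetObject` call on the same bucket, or a `PutObject` on a different bucket, would not match, so only uploads to the monitored bucket reach the SNS topic.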

The SNS topic has been created and there is an e-mail subscription (which I’ve created manually).

Testing

Now let’s test the Eventbridge rule and upload a file to the S3 bucket s3-poc-lambda-golang-eventbridge-cloudtrail:

$ aws s3 cp file.txt s3://s3-poc-lambda-golang-eventbridge-cloudtrail/

upload: ./file.txt to s3://s3-poc-lambda-golang-eventbridge-cloudtrail/file.txt

Then you should get a notification (via e-mail) with the following content:

{

"version": "0",

"id": "02612a4d-bbcb-8050-8bc9-0ce5aa166b5b",

"detail-type": "AWS API Call via CloudTrail",

"source": "aws.s3",

"account": "xxxxxxxxxxxx",

"time": "2024-04-10T17:32:47Z",

"region": "eu-central-1",

"resources": [],

"detail": {

"eventVersion": "1.09",

"userIdentity": {

"type": "IAMUser",

"principalId": "xxxxxxxxxxxxxxxxxxxxx",

"arn": "arn:aws:iam::xxxxxxxxxxxx:user/xxxxxxxx",

"accountId": "xxxxxxxxxxxx",

"accessKeyId": "xxxxxxxxxxxxxxxxxxxx",

"userName": "xxxxxxxx"

},

"eventTime": "2024-04-10T17:32:47Z",

"eventSource": "s3.amazonaws.com",

"eventName": "PutObject",

"awsRegion": "eu-central-1",

"sourceIPAddress": "xxxxxxxxxxxx",

"userAgent": "[aws-cli/2.15.19 Python/3.11.8]",

"requestParameters": {

"bucketName": "s3-poc-lambda-golang-eventbridge-cloudtrail",

"Host": "s3-poc-lambda-golang-eventbridge-cloudtrail.s3.eu-central-1.amazonaws.com",

"key": "file.txt"

},

"responseElements": {

"x-amz-server-side-encryption": "AES256"

},

"additionalEventData": {

"SignatureVersion": "SigV4",

"CipherSuite": "ECDHE-RSA-AES128-GCM-SHA256",

"bytesTransferredIn": 926,

"SSEApplied": "Default_SSE_S3",

"AuthenticationMethod": "AuthHeader",

"x-amz-id-2": "gYxZ55RgLuT+NtnG0jG9uSm3TBP2ZgzxyXQKkKh/x84hG3KkcCjR41DDY8jNcepL2EoxHM4espCnH89VXaU1iw==",

"bytesTransferredOut": 0

},

"requestID": "H0EC27Z64SE4FCCX",

"eventID": "91756431-xxxxxxxxxxxxxxxxxxxxxxxxxxx",

"readOnly": false,

"resources": [

{

"type": "AWS::S3::Object",

"ARN": "arn:aws:s3:::s3-poc-lambda-golang-eventbridge-cloudtrail/file.txt"

},

{

"accountId": "xxxxxxxxxxxx",

"type": "AWS::S3::Bucket",

"ARN": "arn:aws:s3:::s3-poc-lambda-golang-eventbridge-cloudtrail"

}

],

"eventType": "AwsApiCall",

"managementEvent": false,

"recipientAccountId": "xxxxxxxxxxxx",

"eventCategory": "Data",

"tlsDetails": {

"tlsVersion": "TLSv1.2",

"cipherSuite": "ECDHE-RSA-AES128-GCM-SHA256",

"clientProvidedHostHeader": "s3-poc-lambda-golang-eventbridge-cloudtrail.s3.eu-central-1.amazonaws.com"

}

}

}

Outlook

In the next post I’ll show how to trigger a Lambda function via EventBridge. Instead of sending the whole event to SNS, we might want to implement some custom logic inside the AWS Lambda.
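As a rough preview of what such custom logic might look like, here is a small Go sketch that turns an event like the one above into a one-line alert message. It uses only the standard library. A real Lambda function would wrap this in a handler from the aws-lambda-go package, which I've left out here. The type and function names are placeholders, and the message format is just one possible choice.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// envelope models only the parts of the EventBridge event that the
// summary needs; all other fields are ignored during decoding.
type envelope struct {
	Time   string `json:"time"`
	Detail struct {
		EventName    string `json:"eventName"`
		AWSRegion    string `json:"awsRegion"`
		UserIdentity struct {
			ARN string `json:"arn"`
		} `json:"userIdentity"`
		RequestParameters struct {
			BucketName string `json:"bucketName"`
			Key        string `json:"key"`
		} `json:"requestParameters"`
	} `json:"detail"`
}

// summarize decodes a CloudTrail S3 data event and returns a short,
// human-readable line that could be posted to Slack or SNS.
func summarize(raw []byte) (string, error) {
	var e envelope
	if err := json.Unmarshal(raw, &e); err != nil {
		return "", fmt.Errorf("decode event: %w", err)
	}
	d := e.Detail
	return fmt.Sprintf("%s: %s on s3://%s/%s by %s (%s)",
		e.Time, d.EventName,
		d.RequestParameters.BucketName, d.RequestParameters.Key,
		d.UserIdentity.ARN, d.AWSRegion), nil
}

func main() {
	// Shortened version of the test event; the user ARN is a placeholder.
	raw := []byte(`{
		"time": "2024-04-10T17:32:47Z",
		"detail": {
			"eventName": "PutObject",
			"awsRegion": "eu-central-1",
			"userIdentity": {"arn": "arn:aws:iam::123456789012:user/alice"},
			"requestParameters": {
				"bucketName": "s3-poc-lambda-golang-eventbridge-cloudtrail",
				"key": "file.txt"
			}
		}
	}`)
	msg, err := summarize(raw)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(msg)
}
```

Compared to forwarding the whole JSON document to SNS, a summary like this keeps the notification short while still naming who did what, where and when.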
